RESEARCH

An Analysis of Region Clustered BVH Volume Rendering on GPU

High-Performance Graphics 2019; published in a special issue of Computer Graphics Forum (vol. 38, no. 8)

David Ganter and Michael Manzke

Abstract — We present a Direct Volume Rendering (DVR) method that makes use of newly available Nvidia graphics hardware support for Bounding Volume Hierarchies (BVHs). Using BVHs for DVR has been overlooked in recent research because BVH build times could impede interactive rates. We show that this is not necessarily the case, especially when a clustering algorithm is applied before the BVH build to reduce leaf-node complexity. Our results show substantial render-time improvements for full-resolution DVR on GPU compared with a recent state-of-the-art approach for empty-space skipping. Furthermore, using a BVH for DVR allows seamless integration into popular surface-based path-tracing technologies such as Nvidia’s OptiX.

@article{CGF38-8:013-021:2019,

journal = {Computer Graphics Forum},

title = {{An Analysis of Region Clustered BVH Volume Rendering on GPU}},

author = {David Ganter and Michael Manzke},

pages = {013-021},

volume= {38},

number= {8},

year = {2019},

note = {\URL{https://diglib.eg.org/bitstream/handle/10.1111/cgf13756/v38i8pp013-021.pdf}},

DOI = {10.1111/cgf.13756},

}
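As a rough illustration of why bounding boxes around occupied regions speed up DVR, the sketch below computes where a ray overlaps a set of axis-aligned boxes (the standard slab test), merges overlapping intervals, and places ray-marching samples only inside them. It is a simplified stand-in under stated assumptions, not the paper's method: a flat Python list of boxes replaces the hardware-traversed BVH, no clustering step is shown, ray directions are assumed to have no zero components, and all function names are hypothetical.

```python
import numpy as np


def ray_aabb(origin, inv_dir, box_min, box_max):
    """Slab test: return (t_enter, t_exit) for the part of the ray (t >= 0)
    inside the box, or None if the ray misses it."""
    t0 = (box_min - origin) * inv_dir
    t1 = (box_max - origin) * inv_dir
    t_enter = max(float(np.max(np.minimum(t0, t1))), 0.0)
    t_exit = float(np.min(np.maximum(t0, t1)))
    if t_exit < t_enter:
        return None
    return t_enter, t_exit


def occupied_intervals(origin, direction, boxes):
    """Merge the ray intervals of all boxes that contain non-empty voxels.

    A flat list stands in for the BVH here (hypothetical simplification);
    a real BVH would cull whole subtrees instead of testing every box.
    """
    inv_dir = 1.0 / direction  # assumes no zero direction components
    hits = []
    for box_min, box_max in boxes:
        hit = ray_aabb(origin, inv_dir, box_min, box_max)
        if hit is not None:
            hits.append(hit)
    merged = []
    for t_in, t_out in sorted(hits):
        if merged and t_in <= merged[-1][1]:
            merged[-1][1] = max(merged[-1][1], t_out)  # overlapping: extend
        else:
            merged.append([t_in, t_out])
    return merged


def sample_params(origin, direction, boxes, step):
    """Ray-march only inside occupied intervals, skipping empty space."""
    ts = []
    for t_in, t_out in occupied_intervals(origin, direction, boxes):
        ts.extend(np.arange(t_in, t_out, step).tolist())
    return ts
```

Merging intervals avoids sampling the same stretch of a ray twice where boxes overlap; grouping occupied voxels into fewer, tighter boxes (the role a clustering pass before the BVH build could play) reduces how many boxes each ray must test.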

Synthesising Light Field Volumetric Visualizations in Real-time using a Compressed Volume Representation

10th International Conference on Information Visualization Theory and Applications (IVAPP 2019)

Sean Bruton, David Ganter and Michael Manzke

Abstract — Light field display technology will permit visualization applications to be developed with enhanced perceptual qualities that may aid data inspection pipelines. For interactive applications, this will necessitate an increase in the total pixels to be rendered at real-time rates. For visualization of volumetric data, where ray-tracing techniques dominate, this poses a significant computational challenge. To tackle this problem, we propose a deep-learning approach to synthesise viewpoint images in the light field. With the observation that image content may change only slightly between light field viewpoints, we synthesise new viewpoint images from a rendered subset of viewpoints using a neural network architecture. The novelty of this work lies in the method of permitting the network access to a compressed volume representation to generate more accurate images than achievable with rendered viewpoint images alone. By using this representation, rather than a volumetric representation, memory and computation intensive 3D convolution operations are avoided. We demonstrate the effectiveness of our technique against newly created datasets for this viewpoint synthesis problem. With this technique, it is possible to synthesise the remaining viewpoint images in a light field at real-time rates.

@conference{bruton_ivapp19,

author={Seán Bruton and David Ganter and Michael Manzke},

title={Synthesising Light Field Volumetric Visualizations in Real-time using a Compressed Volume Representation},

booktitle={Proceedings of the 14th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications - Volume 3: IVAPP},

year={2019},

pages={96-105},

publisher={SciTePress},

organization={INSTICC},

doi={10.5220/0007407200960105},

isbn={978-989-758-354-4},

}

Using a Depth Heuristic for Light Field Volume Rendering

14th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2019)

Sean Martin, Sean Bruton, David Ganter and Michael Manzke

Abstract — Existing approaches to light field view synthesis assume a unique depth in the scene. This assumption does not hold for an alpha-blended volume rendering. We propose to use a depth heuristic to overcome this limitation and synthesise views from one volume-rendered sample view, which we demonstrate for an 8 × 8 grid. Our approach consists of four stages. Firstly, during direct volume rendering of the sample view, a depth heuristic is applied to estimate a per-pixel depth map. Secondly, this depth map is converted to a disparity map using the known virtual camera parameters. Then, image warping is performed using this disparity map to shift information from the reference view to novel views. Finally, these warped images are passed into a Convolutional Neural Network to improve the visual consistency of the synthesised views. We evaluate multiple existing Convolutional Neural Network architectures for this purpose. Our application of depth heuristics is a novel contribution to light field volume rendering, leading to high-quality view synthesis which is further improved by a Convolutional Neural Network.

@conference{martin_grapp19,

author={Sean Martin and Seán Bruton and David Ganter and Michael Manzke},

title={Using a Depth Heuristic for Light Field Volume Rendering},

booktitle={Proceedings of the 14th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications - Volume 1: GRAPP},

year={2019},

pages={134-144},

publisher={SciTePress},

organization={INSTICC},

doi={10.5220/0007574501340144},

isbn={978-989-758-354-4},

}
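The first three stages in the abstract above (depth heuristic, depth-to-disparity conversion, image warping) can be illustrated with a small NumPy sketch. It is not the authors' implementation: the depth heuristic shown (the depth at which front-to-back accumulated opacity first reaches a threshold) is only one plausible choice, and the function names, the `threshold` parameter, the pinhole relation d = f·B/Z and the sign convention for shifting pixels are all assumptions made for illustration. The CNN refinement stage is omitted.

```python
import numpy as np


def depth_from_opacity(alphas, sample_depths, threshold=0.5):
    """Hypothetical depth heuristic: depth at which accumulated opacity first
    reaches `threshold` when compositing front to back.

    alphas: (H, W, S) per-sample opacities along each ray, front to back.
    sample_depths: (S,) depth of each sample along the ray.
    """
    accumulated = 1.0 - np.cumprod(1.0 - alphas, axis=-1)
    reached = accumulated >= threshold
    first = np.argmax(reached, axis=-1)  # first sample index over threshold
    depth = sample_depths[first].astype(float)
    depth[~reached.any(axis=-1)] = sample_depths[-1]  # never reached: far sample
    return depth


def depth_to_disparity(depth, focal_px, baseline):
    """Assumed pinhole relation d = f * B / Z, in pixels per grid step."""
    return focal_px * baseline / depth


def forward_warp(image, disparity, du, dv):
    """Shift reference pixels to the view offset by (du, dv) grid steps.

    Pixels are painted far to near so that nearer samples (larger disparity)
    win when several land on the same target pixel. Returns the warped image
    and a mask of filled pixels; unfilled pixels are holes.
    """
    h, w = disparity.shape
    out = np.zeros_like(image)
    filled = np.zeros((h, w), dtype=bool)
    for flat in np.argsort(disparity, axis=None, kind="stable"):
        y, x = divmod(int(flat), w)
        tx = int(round(x + du * disparity[y, x]))  # assumed sign convention
        ty = int(round(y + dv * disparity[y, x]))
        if 0 <= tx < w and 0 <= ty < h:
            out[ty, tx] = image[y, x]
            filled[ty, tx] = True
    return out, filled
```

The holes left in the `filled` mask, together with errors from the single-depth approximation, are the kind of artefacts a later refinement stage such as the CNN described above would need to correct.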

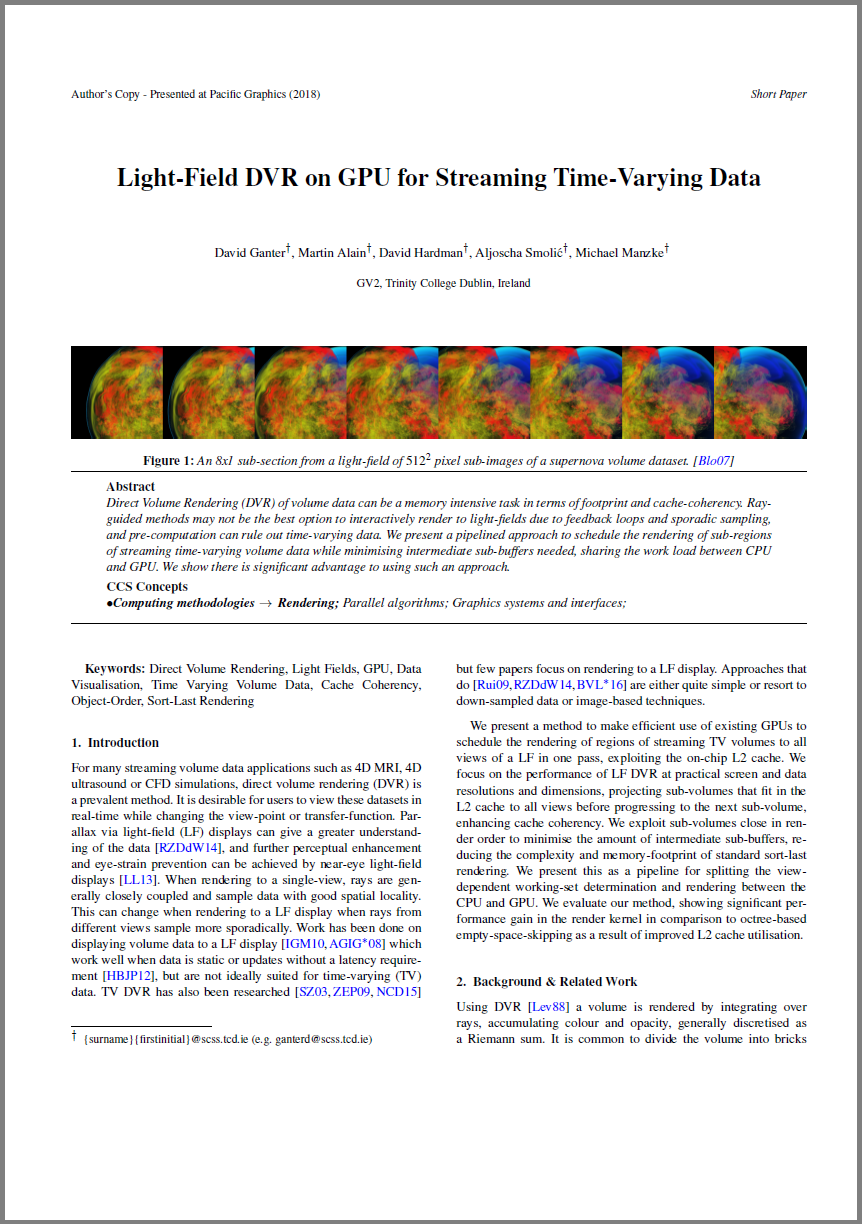

Light-Field DVR on GPU for Streaming Time-Varying Data

Pacific Graphics 2018 Short Papers

David Ganter, Martin Alain, David Hardman, Aljosa Smolic and Michael Manzke

Abstract — Direct Volume Rendering (DVR) of volume data can be a memory-intensive task in terms of footprint and cache coherency. Ray-guided methods may not be the best option for interactive rendering to light fields due to feedback loops and sporadic sampling, and pre-computation can rule out time-varying data. We present a pipelined approach that schedules the rendering of sub-regions of streaming time-varying volume data while minimising the intermediate sub-buffers needed, sharing the workload between CPU and GPU. We show that there is a significant advantage to using such an approach.

@inproceedings{PG-short:069-072:2018,

crossref = {PG-short-proc},

title = {{Light-Field DVR on GPU for Streaming Time-Varying Data}},

author = {David Ganter and Martin Alain and David Hardman and Aljosa Smolic and Michael Manzke},

pages = {069-072},

year = {2018},

note = {\URL{https://diglib.eg.org/bitstream/handle/10.2312/pg20181283/069-072.pdf}},

DOI = {10.2312/pg.20181283},

}

PERSONAL PROJECTS

WebGL & Voxel Pathfinding

An exercise in WebGL and a refresher in basic AI pathfinding.